Ten years from now the whole idea of connecting to Wi-Fi will become a frictionless and painless experience.

India: Amazon starts selling mobile phones and cameras

Why Hadoop projects fail — and how to make yours a success | VentureBeat

Report: How People Really Use Tablets While Watching TV | Co.Design: business + innovation + design

“Second screens need to feed someone’s curiosity casually, not greedily demand their spare attention.”

via Report: How People Really Use Tablets While Watching TV | Co.Design: business + innovation + design.

Algorithm Ethics

An algorithm is a structured description of how to calculate things. Some of the most prominent examples of algorithms have been around for more than 2000 years, like Euclid’s algorithm, which gives you the greatest common divisor, or Eratosthenes’ sieve, which gives you all prime numbers up to a given maximum. These two algorithms do not contain any kind of value judgement. If I define a new method for selecting prime numbers – and many of those have been published! – every algorithm will come to the same solution. A number is prime or not.
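As a minimal sketch of the two classics mentioned above (the function names `gcd` and `primes_up_to` are my own choice, not taken from any particular library), both can be written in a few lines of Python. Whatever implementation you pick, the answers are fixed by the mathematics:

```python
def gcd(a: int, b: int) -> int:
    """Euclid's algorithm: greatest common divisor of two non-negative integers."""
    while b:
        # Replace (a, b) by (b, a mod b) until the remainder is zero.
        a, b = b, a % b
    return a


def primes_up_to(limit: int) -> list[int]:
    """Sieve of Eratosthenes: all prime numbers <= limit."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= limit:
        if is_prime[p]:
            # Cross out every multiple of p, starting at p squared.
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
        p += 1
    return [n for n, flag in enumerate(is_prime) if flag]


print(gcd(48, 18))
print(primes_up_to(30))
```

There is no parameter in either function that a developer could tune according to taste; that is what sets them apart from the algorithms discussed next.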

But there is a different kind of algorithmic process that is far more common in our daily life. These are algorithms that have been chosen to solve some task that others would probably have solved in a different way. Obvious value judgments made by calculation, like credit scoring and rating, immediately come to mind when we think about ethics in the context of calculations. However, there is a multitude of “hidden” ethical algorithms that are far more pervasive.

One example that I encountered was given by Gary Wolf at the Quantified Self Conference in Amsterdam. Wolf told of his experiment in taking different step-counting gadgets and analyzing the differing results. His conclusion: there is no common concept of what is defined as “a step”. And he is right. The developers of the different gadgets have arbitrarily chosen one method or another to map the data collected by the gadgets’ gyroscopic sensors into distinct steps to be counted.

So the first value judgment comes with choosing a method.
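To make this concrete, here is a small sketch with two plausible but different definitions of “a step”. The sensor readings, thresholds and function names are invented for illustration; no actual gadget is claimed to work this way.

```python
def count_threshold_crossings(signal, threshold):
    """Method A: one step each time the signal rises from below the
    threshold to at or above it."""
    steps = 0
    for prev, cur in zip(signal, signal[1:]):
        if prev < threshold <= cur:
            steps += 1
    return steps


def count_peaks(signal, min_height, min_gap):
    """Method B: one step per local maximum that is high enough and at
    least min_gap samples after the previously counted peak."""
    steps = 0
    last_peak = None
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if is_local_max and signal[i] >= min_height:
            if last_peak is None or i - last_peak >= min_gap:
                steps += 1
                last_peak = i
    return steps


# Hypothetical sensor magnitudes recorded during one short walk.
signal = [1.0, 1.4, 0.9, 1.5, 1.0, 1.1, 1.6, 1.0, 1.3, 1.0, 1.5, 0.9]

print(count_threshold_crossings(signal, threshold=1.2))
print(count_peaks(signal, min_height=1.2, min_gap=3))
```

On this made-up recording the first method reports 5 steps and the second reports 3. Neither is wrong; they simply encode different decisions about what counts.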

Many applications we use work on a fixed set of parameters, like the preselection of a mobile-optimized CSS when the web server encounters what it takes for a mobile browser. Often we get the choice to switch to the “Web mode”, but there are still many sites that do not allow us to change the view unless we trick the server into believing that our browser is a “PC version” and not a mobile one. This of course is a very simple example, but the case should be clear: someone set a parameter without asking for our opinion.

The second way of having to deal with ethics is the setting of parameters.

A good example is given by Kraemer et al. in their paper. In medical imaging technologies like MRI, an image is calculated from data like tiny electromagnetic distortions. Most doctors (I asked some explicitly) take these images as such (just as they used to take photographs without bothering much about the underlying technology). However, there are many parameters that the developers of such an algorithmic imaging technology have predefined and that will affect the outcome in an important way. Whether a blood vessel is already clogged by arteriosclerosis or can still be regarded as healthy is a typical decision where we would like to be on the safe side and thus tend to underestimate the volume of the vessel, i.e. prefer a more blurry image, while a surgeon planning her cut might ask for a very sharp image that tends to overestimate the vessel’s volume.

The third value judgment is – as this illustrates – how to deal with uncertainty and misclassification.

This is what we call alpha and beta errors. Most people (especially in a business context) concentrate on the alpha error, that is, on minimizing false positives. But when we take the cost of a misjudgement into account, the false negative is often much more expensive. Employers, for example, tend to look for “the perfect” candidate and tend to turn down applications that raise doubts. By doing so, they will obviously miss many opportunities for the best hire. The cost of firing someone who was hired under false expectations is far less than the cost of never having the chance to learn about someone at all, someone who might have been the hidden gem.

The problem with the two types of error is that you cannot minimize both simultaneously. So we have to make a decision. This is always a value judgment, always ethical.
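The trade-off can be sketched with a toy classifier that accepts every candidate whose score reaches a threshold. The scores, labels, candidate thresholds and cost figures below are invented for illustration; the point is only that the “best” threshold depends on how we price each kind of error.

```python
def confusion_counts(scores, labels, threshold):
    """Return (false_positives, false_negatives) when every score at or
    above the threshold is classified as positive."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return fp, fn


def cheapest_threshold(scores, labels, thresholds, cost_fp, cost_fn):
    """Pick the candidate threshold with the lowest total error cost."""
    def total_cost(t):
        fp, fn = confusion_counts(scores, labels, t)
        return fp * cost_fp + fn * cost_fn
    return min(thresholds, key=total_cost)


# Hypothetical data: a score per applicant and whether they were truly a good hire.
scores = [0.1, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
labels = [False, False, True, False, True, False, True, True]
thresholds = [0.2, 0.45, 0.75]

for t in thresholds:
    print(t, confusion_counts(scores, labels, t))

# Pricing a missed good hire five times higher than a bad hire, and vice versa.
print(cheapest_threshold(scores, labels, thresholds, cost_fp=1, cost_fn=5))
print(cheapest_threshold(scores, labels, thresholds, cost_fp=5, cost_fn=1))
```

Lowering the threshold removes false negatives at the price of more false positives, and raising it does the opposite. Which threshold wins is decided entirely by the two cost parameters, that is, by a value judgment.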

With drones prepared for autonomous kill decisions this discussion becomes existential.

All three judgments – What method? What parameters? How to deal with misclassification? – are more often than not made implicitly. For many applications, the only way to understand these presumptions is to “open the black box” – hence to hack.

Given all that, I would like to demand three points of action:

– to the developers: you have to keep as many options open as possible and give others a chance to change the presets (and customers: you must insist on this when you order the programming of applications);

– to the educational systems: teach people to hack, to become curious about seeing behind things.

– to our legislative bodies: make hacking things legal. Don’t let copyright, DRM and the like be used against people who re-engineer things. Only what gets hacked gets tested. Let us have sovereignty over the things we have to deal with; let us shape our surroundings according to our ethics.

Note

At the last re:publica conference I gave a talk and hosted a discussion on “Algorithm ethics” that was recorded (in German).

The Quantified Self

“For Quantified Self, ‘big data’ is more ‘near data’, data that surrounds us.”

Gary Wolf

Quantified Self can be viewed as taking action to reclaim the collection of personal data, not because of privacy but because of curiosity. Why not take the same approach that made Google, Amazon and the like so successful and use big data on yourself?

What self is there to be quantified?

What is the “me”? What is left when we deconstruct what we are used to regarding as “our self” into quanta? Is there a ghost in the shell? The idea of self-quantification implies an objective self that can be measured. With QS, the rather abstract outcomes of neuroscience or human genetics become tangible. The more we have quantitatively deconstructed ourselves, the less is left for mind/body dualism.

“One is obliged, moreover, to confess that perception, and that which depends on it, is inexplicable by mechanical reasons.”

G. W. Leibniz

As a Catholic, I was never fond of the idea that our conscious mind would just be a Mechanical Turk. As a mathematician, I feel deep satisfaction in seeing our world, including my very own self, becoming datarizable – Pythagoras was right, after all! This dialectic deconstruction of suspicious dualism and materialistic reductionism was discussed in three sessions I attended – Whitney Boesel’s “The missing trackers”, Sarah Watson’s “The self in data” and Natasha Schüll’s “Algorithmic Selfhood”.

“Quantifying yourself is like art: constructing a kind of expression.”

Robin Barooah

Many projects I saw at #qseu13 can be classified as art projects in their effort to find the right language to express the usually inexpressible. But compared to most “classic” artists I know, the QS apologists are far less self-centered (this sounds more contradictory than it is) and much more focused on changing things by using data to find the sweet spot at which to set their levers.

What starts with counting your steps ends, consequently, in shaping yourself with technological means. Enhancing your bodily life with technology is the definition of becoming a cyborg, as my friend Enno Park points out. Enno got cochlear implants to overcome his deafness. He now advocates for cyborg rights, starting with his right to hack into his implants. Enno demands his right to tweak the technology that became part of his head.

Self-hacking will become as common as taking aspirin to cure a headache. Even more: we will have to become literate in the quantification techniques to keep up with others who would otherwise do it for us: biometric security systems, medical imaging and auto-diagnosis. Expressing ourselves with our data will become part of our communication culture, as social media are today. So there will not be much of an alternative left for those who have doubts about quantifying themselves. “The cost of abstention will drive people to QS,” as Whitney Boesel mentioned.

Data Humanities

In her remarkable talk at Strataconf, Kate Crawford warned us that we should always suspect our “Big Data” sources of being highly biased, since the standard tools for dealing with samples (as mentioned above) are usually neglected when the data is collected.

Nevertheless, even the most biased data gives us valuable information; we just have to be careful with generalizing. Of course this is only relevant for data relating to humans using some kind of technology or service (like websites collecting cookie data or people using some app on their phone). However, I am in any case much more interested in the humanities’ side of data: data describing human behavior, data as an additional dimension of people’s lives.

Given all this, I suggest calling this field of behavior data “Data Humanities” rather than “Data Science”.

Prediction vs. Description or: Data Science vs. Market Research

“My market research indicates that 50% of your customers are above the median age. But the shocking discovery was that 50% were below the median age.”

(Dilbert; read it somewhere, can’t remember the source)

It was funny to see everyone at O’Reilly’s Strata Conference talk about data science and hear just the dinosaurs like Microsoft, Intel or SAP still calling it “Big Data”. For me, too, data science is the real change, and I will tell you why:

What always annoyed me when working with market researchers: you never get an answer. All you get is a description of the sample. Drawing samples was certainly a difficult task 50 years ago. You had to send interviewers around, using a Kish grid (does anyone remember this, at least outside Germany?). The data had to be coded onto punch cards, and clumsy software was used to plot elementary descriptives from ASCII characters. If you still use SPSS, you might know what I am talking about. When I studied statistics in the early 90s, testing hypotheses was much more important than predictions, and visualisation was not invented yet. The typical presentation of a market researcher would thus start with describing the sample (50% male, 25% from 20 to 39 years, etc.), and in the end they would leave the client with some more or less trivially aggregated Excel tables.

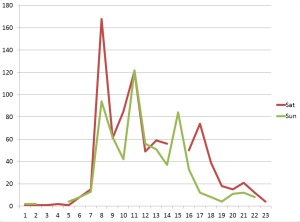

When I took charge of pricing the ad breaks of a large TV network, all this research was useless for my purposes. My job required predicting the measured audiences of each of the approximately 40 ad breaks for each of our four national stations six weeks in advance. I had to make the decision in real time, no matter how accurate the information on which I calculated the risks was.

Market research is bad at supporting real-time management decisions. So managers tend to decide on their “gut feelings”. But the framework has changed. The last decade brought us the possibility of accessing huge data sets with low latency and running highly multivariate models. You can’t do online advertising targeting based on gut feelings.

But most market researchers would still argue that the analytics behind ad targeting are not market research, because they rely only on probabilistic decisions, on predictions based on correlations rather than causality. Machine learning does not test a hypothesis that was derived from a theoretical construct of ideas. It identifies patterns, and the prediction is taken as accurate only if the effect on the ROI is better than before.

I can very well live with the researchers keeping to their custom as long as I may use my data to make the predictions I need. When attending Strata Conference, I realized how deep this paradigm shift is: from market research, describing data as an end in itself, to data science, getting to predictions.

Maybe it is thus a good thing to differentiate between market research and data science.

(This is the first in a row of posts on our impressions at Strata this year; the others will follow quickly …)

Why is there something like the Hype Cycle?

In computer science we have learned that we can cope with non-linear models only in rare special cases. Not only our machines but also our minds are incapable of foreseeing non-linear developments. One of the achievements of Mandelbrot’s work and of “Chaos Theory” is that we now better understand how this works and that we truly have no alternative.

You might have wondered why the phenomenon of the hype has such a distinct form that consultancies like Gartner can even draw a curve – the famous “Gartner Hype Cycle of Emerging Technologies”. We will try to give a simple explanation.

Even if we don’t really believe the “best case”, it is wise to prepare for the changes that a “better case” would deliver. We start observing the market figures. We see that the new technology is quickly adopted by our peers (or those whom we would love to have as peers …). We see that the new technology gets funding, at a valuation that reflects the expected market potential but is effective today.

In reality, it is not that simple to produce and distribute novel technologies or services to mass markets. This requires more skills than just inventing them. There is usually some economy of scale in production and logistics, and it takes time to build business relationships and negotiate sales contracts.

Why do we find this sigmoid shape of the growth curve? First: the “hype” normally does not happen in the sales numbers of our technology; the “early adopters” are just too few to make a real impact. Beyond that, it is the law of decreasing marginal costs: every new unit is produced (or sold) more easily than the lots we had produced before. Only very shortly before hitting the ceiling of the market potential do we see saturation, with diminishing marginal profits when we “reach the plateau”.
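One common way to draw such a sigmoid is the logistic function. The sketch below assumes this particular form, and the ceiling, growth rate and midpoint are made-up numbers chosen only to show the shape; it is an illustration, not a model fitted to any market.

```python
import math


def logistic(t, ceiling, growth_rate, midpoint):
    """Logistic growth curve: slow start, steep middle, saturation at the ceiling."""
    return ceiling / (1.0 + math.exp(-growth_rate * (t - midpoint)))


# Hypothetical market: potential of 1000 units, inflection after 5 years.
ceiling, growth_rate, midpoint = 1000.0, 1.0, 5.0

# Additional units gained in each year, i.e. the "speed" of adoption.
yearly_gains = [
    logistic(year + 1, ceiling, growth_rate, midpoint)
    - logistic(year, ceiling, growth_rate, midpoint)
    for year in range(10)
]

for year, gain in enumerate(yearly_gains):
    print(f"year {year} to {year + 1}: +{gain:.1f} units")
```

The yearly gains are tiny at first, peak around the midpoint and shrink again as the curve approaches the ceiling. Anyone extrapolating linearly from the first few years will badly underestimate the middle, and anyone extrapolating from the middle will overshoot the plateau.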

We have experienced this with many industries during the last decades: the newspaper publishers, who experimented very early with the new, digital distribution but then completely failed to be ready when the time came; the same with the phone makers (we will come to this example later); and we will see this happen again: electric cars, head-up displays, 3D printing, market research, just to name a few. The astonishing fact is that all these disruptions have already taken place. It is just the linear projections and bad scenario planning that prevent us from making the right decisions to cope with them.

Foresight: Scenarios vs. Strategies

Chess is a game that does not depend on chance. Every move can be evaluated exactly in mathematical terms, and in theory we can calculate the optimal strategy for both colors from any arbitrary position up to the end of the match.

Interestingly, there is hardly any “intelligent” chess program. Almost everything that was coded during the last 40 years solves the match with brute force: calculating the results for almost every path a few moves in advance and then choosing a move that is optimal in the short term. This works because the computer can crunch millions of variations after every move. However, this can hardly be called strategic.
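The brute-force idea can be sketched as a depth-limited search. Chess itself is far too large for a short example, so the sketch below swaps in a toy game as a stand-in: players alternately take 1, 2 or 3 tokens from a pile, and whoever takes the last token wins. The function names and the toy game are my own illustration and are not taken from any chess engine.

```python
MOVES = (1, 2, 3)


def negamax(pile, depth):
    """Score a position for the player to move: +1 = forced win found,
    -1 = forced loss found, 0 = nothing decided within the search horizon."""
    if pile == 0:
        return -1  # the opponent just took the last token
    if depth == 0:
        return 0   # horizon reached: the search is blind beyond this point
    return max(-negamax(pile - take, depth - 1) for take in MOVES if take <= pile)


def best_move(pile, depth):
    """Try every legal move, look `depth` plies ahead, keep the best score.
    Ties are resolved in favour of the first move tried."""
    legal = [take for take in MOVES if take <= pile]
    return max(legal, key=lambda take: -negamax(pile - take, depth - 1))


print(best_move(6, depth=4))  # deep enough to see the end of the game
print(best_move(6, depth=1))  # too shallow: every move looks the same
```

With a pile of 6 and four plies of lookahead the search finds the winning move (take 2, leaving 4). With one ply it cannot tell the moves apart and simply takes 1. There is no concept of the game in this code, only enumeration up to a horizon.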

Regarding foresight in economy, culture, society, etc., we are used to scenarios: we play through all possible developments by changing one parameter at a time. Most prominent is the “Worst Case Scenario”, where we just set all the controls of our model to the minimum.

As in chess, we apparently defeat the most complex problems with this mindless computation. What we will never get are insights into disruptions, epochal changes, revolutions.

Disruptions occur at those points where the curve bends. Mathematically speaking, “bend” means that the function describing the development has no derivative at this point, so it changes its direction abruptly. If we imagine a car driving along the so far smooth curve, the driver will be caught completely by surprise by the bend in the track.

In reality, these bends almost never happen without some augury. Disruptions evolve through the superposition of processes. We might think of the processes as oscillations, like waves. Not every new wave that adds its influence to the development we have in focus will cause a noticeable distortion. Many such processes tune into the main waves of the development unrecognized.

Critical are those distortions, new processes, that occur and start to build up with the existing development, like a feedback loop, to finally dominate the development completely.

The art of foresight is to identify exactly these waves that have the potential to build up and break through the system.

We will discuss some examples of this occasionally.